Lab 4: Wizard-of-Oz GUI for Misty

Learning Goals

- Students will develop a Wizard-of-Oz (WoZ) GUI that enables a human experimenter to control Misty in real time during a human-robot interaction.

- Students will expand Misty's expressive capabilities by creating GUI buttons for speech, gestures, LED changes, and other behaviors.

Lab Overview

In this lab, you will build a GUI that allows a human to control Misty, starting from the lab_4_misty_woz_gui.py starter code in the Lab 4 GitHub repository. The goal is to simulate Misty's conversational abilities and nonverbal behaviors in a controlled setting.

The interface will include:

- Pre-scripted utterance buttons for things like greetings, expressions of gratitude, and transitions.

- A text entry box and send button for generating custom speech via Misty's speaker.

- Custom control buttons for Misty's movements, facial displays, LED colors, arm gestures, audio clips, and more.

Starter Code

Begin by downloading or cloning lab_4_misty_woz_gui.py from the Lab 4 GitHub repository.

If you get ModuleNotFoundError: No module named 'PIL', install the Pillow package in your virtual environment with:

pip install Pillow

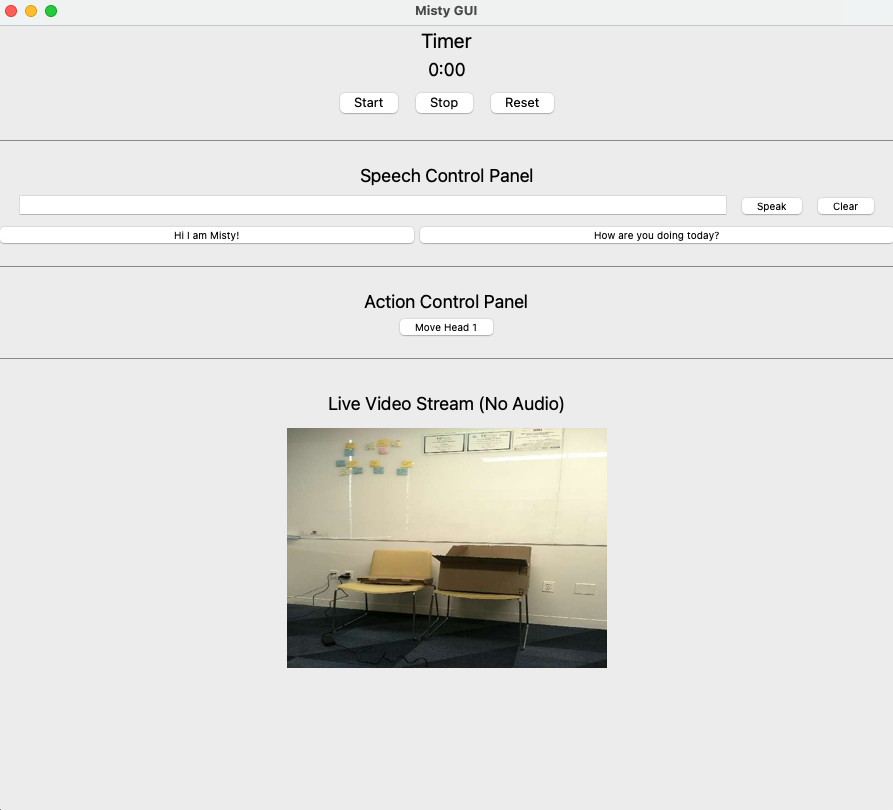

When you run the starter code (python3 lab_4_misty_woz_gui.py ), you will see the following starter GUI:

Within the starter code, you are asked to:

- Add additional buttons for pre-scripted speech in the GUI.

- Create custom buttons for enabling Misty to have nonverbal and animated responses to human speech (e.g., nodding, expressing surprise) using Misty's driving, LED lights, facial display, arms, audio playback, etc.

- Include new if-statements to handle these new button actions in the backend logic.
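The starter code's internals are not reproduced in this handout, so the following is only a minimal sketch of the "new button, new if-statement" pattern. `send_to_misty(kind, payload)` is a hypothetical placeholder, not part of Misty's API or the starter code: replace its body with the real Misty Python calls you used in Lab 3. The action names, utterances, and angle/LED values are also placeholders.

```python
# Sketch of the "new button -> new if-statement" pattern.
# send_to_misty() is a HYPOTHETICAL placeholder, not Misty's API or the starter
# code: swap its body for the real Misty Python calls you used in Lab 3.

sent = []  # records what would have been sent, so the logic runs without a robot


def send_to_misty(kind, payload):
    """Hypothetical stand-in for one Misty API call (speech, head, LED, arms...)."""
    sent.append((kind, payload))


def handle_button(action):
    """Map one button's action name to one or more robot commands."""
    if action == "greeting":
        send_to_misty("speak", "Hi there! It's nice to meet you.")
    elif action == "thank_you":
        send_to_misty("speak", "Thank you for sharing that with me.")
    elif action == "nod":
        # Tilt the head away from neutral and back; angle values are placeholders.
        send_to_misty("head", {"pitch": 15})
        send_to_misty("head", {"pitch": 0})
    elif action == "surprise":
        # One social reaction can combine several channels at once.
        send_to_misty("led", (255, 200, 0))
        send_to_misty("arms", {"left": -45, "right": -45})
        send_to_misty("speak", "Oh, wow!")
    else:
        raise ValueError(f"Unknown button action: {action!r}")


def add_buttons(parent):
    """Create one tkinter button per action inside `parent` (a Frame or Tk root)."""
    import tkinter as tk  # local import so the dispatch logic above needs no display

    buttons = [("Greeting", "greeting"), ("Thank you", "thank_you"),
               ("Nod", "nod"), ("Surprise", "surprise")]
    for label, action in buttons:
        # a=action binds the current value; a bare closure would make every
        # button use the last action in the loop.
        tk.Button(parent, text=label,
                  command=lambda a=action: handle_button(a)).pack(side=tk.LEFT, padx=4)
```

With this layout, adding a behavior means adding one (label, action) pair and one elif branch. Depending on how the real head-movement call behaves, a visible nod may also need a short pause or a movement duration between the two head commands.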

Working in Groups

Groups for this lab have been reassigned: students were first split by whether or not they consented to participate in the research study, then randomly assigned to groups within each of those two sets. Each group will submit one version of the code for its GUI design.

Lab 4 Deliverables & Submission

Your GUI must include:

- A control panel of pre-scripted message buttons that enables the robot to execute all of the main speech actions required for the "Three Good Things" exercise.

- A text input field and submit button for the Wizard to enter dynamic (non-pre-scripted) speech for the robot to say.

- At least 4 robot social reaction buttons where each button allows the robot to express a nonverbal behavior (e.g., nodding) or social reaction (e.g., vocal backchannel, expression of surprise). These can leverage the robot's LED, movement, arms, and/or display.
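For the text input requirement, here is a minimal sketch of the submit logic. `speak` is a hypothetical callback standing in for whatever Misty text-to-speech call your code makes, and `clean_utterance` / `build_speech_box` are illustrative names, not part of the starter code. The cleanup step lives in its own function so it can be checked without opening a window; binding the Enter key as well as the button is an optional convenience that may help the wizard reply quickly.

```python
def clean_utterance(raw):
    """Return the text to speak, or None if the box is empty or only whitespace."""
    text = " ".join(raw.split())  # collapse stray newlines and repeated spaces
    return text or None


def build_speech_box(parent, speak):
    """Entry + Send button. `speak` is a hypothetical callback (str -> None)."""
    import tkinter as tk  # local import: the helper above runs without a display

    entry = tk.Entry(parent, width=50)
    entry.pack(side=tk.LEFT, padx=4)

    def submit(event=None):  # event is passed by the key binding, not the button
        text = clean_utterance(entry.get())
        if text is not None:
            speak(text)
            entry.delete(0, tk.END)  # clear the box for the next reply

    tk.Button(parent, text="Send", command=submit).pack(side=tk.LEFT)
    entry.bind("<Return>", submit)  # pressing Enter in the box also sends
    return entry
```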

We ask you to turn in the following:

- A video recording of your GUI in action with the robot. This video should have both the Wizard-of-Oz interface and robot in the frame and showcase ONLY the first round of the "Three Good Things" exercise (robot disclosure, human disclosure, and robot response).

- Your updated source code:

lab_4_misty_woz_gui.py

Submit both to Canvas by Friday, April 18 at 6:00pm.

"Three Good Things" Interaction Flow

As introduced in Lab 3, you will be programming the Misty robot to facilitate a positive psychology exercise called "Three Good Things." This well-known positive psychology intervention has been shown to improve overall mental health and well-being. In the exercise, the robot and the participant take turns sharing three things from the past week that they are grateful for. The interaction between the robot and the human participant should flow as follows, with each robot step marked as pre-scripted or dynamic for the wizard:

- Introduction (pre-scripted): The robot introduces itself and the "Three Good Things" exercise to the human participant. Optionally, you can include some back-and-forth between the human and robot here (including options for dynamic input), such as asking for the participant's name or asking how they're doing.

- Robot Disclosure #1 (pre-scripted): The robot will start the exercise by sharing one thing that it is grateful for. Then, it will prompt the participant to share one thing they're grateful for.

- Participant Disclosure #1: The human participant shares one thing they are grateful for.

- Robot Response to Participant Disclosure #1 (dynamic): The robot responds in 1 sentence (or so) to what the human participant has shared.

- Robot Disclosure #2 (pre-scripted): The robot shares a second thing it is grateful for.

- Participant Disclosure #2: The human participant shares a second thing they are grateful for.

- Robot Response to Participant Disclosure #2 (dynamic): The robot responds to what the human participant has shared.

- Robot Disclosure #3 (pre-scripted): The robot shares a third thing it is grateful for.

- Participant Disclosure #3: The human participant shares a third thing they are grateful for.

- Robot Response to Participant Disclosure #3 (dynamic): The robot responds to what the human participant has shared.

- Robot Conclusion (pre-scripted): The robot concludes the interaction, thanks the participant for their participation, and says goodbye.
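One way to keep the wizard oriented during a session is to encode the flow above as data and generate the pre-scripted buttons from it. This is a sketch of an optional design, not part of the starter code: the `Step` structure and `build_flow` helper are hypothetical, and every utterance below is a placeholder for your group's own script.

```python
from typing import NamedTuple, Optional


class Step(NamedTuple):
    name: str
    kind: str            # "scripted" (button), "participant" (wizard listens), "dynamic" (wizard types)
    text: Optional[str]  # utterance for scripted steps; None otherwise


def build_flow(introduction, disclosures, conclusion):
    """Lay out the exercise: introduction, one round per disclosure, conclusion."""
    steps = [Step("Introduction", "scripted", introduction)]
    for i, line in enumerate(disclosures, start=1):
        steps.append(Step(f"Robot Disclosure #{i}", "scripted", line))
        steps.append(Step(f"Participant Disclosure #{i}", "participant", None))
        steps.append(Step(f"Robot Response #{i}", "dynamic", None))
    steps.append(Step("Robot Conclusion", "scripted", conclusion))
    return steps


# Placeholder script text; replace with your group's own wording.
FLOW = build_flow(
    introduction="Hi, I'm Misty! Today I'd like to do an exercise called Three Good Things with you.",
    disclosures=[
        "<robot's first good thing>. What is one thing you're grateful for from this past week?",
        "<robot's second good thing>. What is another thing you're grateful for?",
        "<robot's third good thing>. What is a third thing you're grateful for?",
    ],
    conclusion="Thank you for sharing with me today. Goodbye!",
)

# The scripted steps are the ones that need a pre-scripted button in the GUI.
scripted_buttons = [(s.name, s.text) for s in FLOW if s.kind == "scripted"]
```

Each (name, text) pair in scripted_buttons could become one GUI button that speaks its text, and a label showing the current step's name could help the wizard see where they are in the exercise.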

Tips & Resources

- Refer back to your Lab 3 knowledge about Misty's Python API: speech, movement, display, LEDs, etc.

- Refer to the online tkinter documentation to create the GUI.

- Keep the interface simple and usable for real-time interaction.

- Check the Misty API references:
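On keeping the interface usable in real time: if a robot command is a blocking network call, the tkinter window stops responding until that call returns. A minimal sketch of one common fix, assuming your robot calls are ordinary Python functions, is to hand them to a single background thread through a queue. This is a general Python pattern, not something the starter code or Misty's API provides, and `CommandWorker` is a hypothetical name.

```python
import queue
import threading


class CommandWorker:
    """Run robot commands one at a time, in order, on a background thread."""

    def __init__(self):
        self._jobs = queue.Queue()
        # daemon=True: the thread will not keep Python alive after the window closes
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, func, *args, **kwargs):
        """Queue func(*args, **kwargs) and return immediately (safe to call from tkinter)."""
        self._jobs.put((func, args, kwargs))

    def wait(self):
        """Block until every queued command has finished (mainly useful for testing)."""
        self._jobs.join()

    def _run(self):
        while True:
            func, args, kwargs = self._jobs.get()
            try:
                func(*args, **kwargs)
            except Exception as exc:  # one failed command should not kill the worker
                print(f"Robot command failed: {exc!r}")
            finally:
                self._jobs.task_done()
```

In a button callback this becomes something like command=lambda: worker.submit(speak, "Thank you!"), where speak stands for whichever function sends speech to Misty in your code. Because commands run strictly in order, a reaction queued while a previous call is still returning waits its turn. tkinter widgets themselves should still be updated only from the main thread.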

Extra Challenge

For an additional challenge, consider adding:

- Keyboard shortcuts for quick triggering of behaviors

- A simple visual log showing which commands were issued and when
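For the extra challenges, here is a minimal sketch of both features. The key choices (F1 to F4), the action names, `CommandLog`, `bind_shortcuts`, and the `handler` callback are all hypothetical; `root` is your tkinter main window. Function keys are used because they don't insert text, so pressing one while the cursor is in the speech box won't also type into it. The log stores time elapsed since the session started, which may make it easier to line the log up with your video recording.

```python
import time

# Hypothetical shortcut map: function key -> action name passed to your handler.
SHORTCUTS = {"F1": "greeting", "F2": "thank_you", "F3": "nod", "F4": "surprise"}


class CommandLog:
    """Timestamped list of the commands the wizard issued this session."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock          # injectable so the log can be tested
        self._start = clock()
        self.entries = []

    def record(self, command):
        """Append and return a line like '[   12.3s] nod'."""
        elapsed = self._clock() - self._start
        line = f"[{elapsed:7.1f}s] {command}"
        self.entries.append(line)
        return line


def bind_shortcuts(root, handler, log, shortcuts=SHORTCUTS):
    """Bind each key on the main window so it logs and triggers its action."""
    for key, action in shortcuts.items():
        def on_key(event, action=action):  # default arg captures this loop's action
            log.record(action)
            handler(action)
        root.bind(f"<{key}>", on_key)
```

To make the log visible in the GUI, each line returned by record() could be appended to a tk.Listbox with listbox.insert(tk.END, line), or to a read-only tk.Text widget.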